Background

Once usage of mileage tracking reached critical mass, the business focus shifted from user acquisition and growth to user retention.

To define our product roadmap, my PM lead and I audited the end-to-end (E2E) product experience from both a user perspective and an internal process perspective.

We gathered usage metrics across onboarding, active usage and behavior patterns. I also conducted a series of contextual inquiries with users, our product team and the customer success team to map the product experience and identify areas of opportunity.

Role and contribution

As the design lead for mileage tracking, I interviewed users, our engineers and care agents to create a service blueprint that identified gaps in our online and offline experience. I then worked with our PM to prioritize areas of opportunity and pitched the idea of grouping trips to our data science team. Finally, I ran a design sprint with the full mileage tracking team, which produced an A/B test we could run in the product to test our hypothesis, along with a scalable design pattern that works for a variety of trip groups.

Team composition: design lead (me), 3 product designers, a PM lead, an engineering lead and a data scientist.

Blueprint for the E2E mileage tracking experience

I used the learnings from the contextual inquiries, user reviews and behavior data to create a service blueprint spanning online and offline experiences. We then walked through it as a team to identify potential areas of opportunity.

We ran a prioritization exercise to identify which areas of opportunity to work on over the next year, based on a combination of user feedback and business goals. At the end of the exercise, we landed on 3 areas. For the rest of the year, I focused on the “Automate trip review process” goal and directed the team to drive the other 2 areas.

Research and findings

In addition to the research done for the blueprint, I engaged our data science team to identify user behaviors based on app usage patterns from the past year. I summarized the learnings into 3 findings:

People are habitual and visit locations repeatedly.

19% of all logged Business trips are repeat trips.

Around 29% of all tracked trips are left unreviewed.

Customer problem statement

As a QBSE mileage tracking user, I have to be super diligent in reviewing my trips because it is very easy to forget the purpose of a trip after a few days. I’m scared of not being truthful in my mileage log, and that makes me anxious and frustrated.

Grouping trips

The app already let users create rules to categorize trips, but usage of that feature was very low. One of the most common pieces of feedback we got from users was that it was cumbersome to go through a wall of trips, recognize each one and then categorize it as Business or Personal. To address this problem, I began looking at ways to group trips based on what they had in common.

Grouping explorations

Once I had the patterns down, I wanted to see, at a very high level, how groups could be expressed within the app. These are a few examples of how the grouping concept could scale to a variety of location- and time-based use cases. The goal was to solve for 2 things: (1) make it easy for users to understand why a group was created, and (2) let them take action on the whole group at once.
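To make the location- and time-based grouping idea concrete, here is a minimal rule-based sketch. It is not the production Smart groups model; it simply assumes a hypothetical `Trip` record with a normalized destination and start time, groups trips that share a destination, weekday and coarse time-of-day bucket, and attaches a plain-language reason (goal 1) plus a helper that applies one decision to the whole group (goal 2). The names `Trip`, `group_trips`, `categorize_group` and the time buckets are all illustrative assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class Trip:
    """Hypothetical trip record; field names are assumptions for illustration."""
    trip_id: str
    destination: str                # assumed to be a normalized place name
    start: datetime
    miles: float
    category: Optional[str] = None  # "Business", "Personal", or None if unreviewed


def time_bucket(dt: datetime) -> str:
    """Coarse time-of-day bucket used as one grouping signal (assumed boundaries)."""
    if 5 <= dt.hour < 12:
        return "morning"
    if 12 <= dt.hour < 17:
        return "afternoon"
    return "evening"


def group_trips(trips, min_size=2):
    """Group trips sharing destination, weekday and time bucket; keep groups of min_size+."""
    buckets = defaultdict(list)
    for trip in trips:
        key = (trip.destination, trip.start.strftime("%A"), time_bucket(trip.start))
        buckets[key].append(trip)
    groups = []
    for (dest, weekday, bucket), members in buckets.items():
        if len(members) >= min_size:
            # A human-readable reason so users can see why the group exists.
            reason = f"{len(members)} trips to {dest} on {weekday} {bucket}s"
            groups.append({"reason": reason, "trips": members})
    return groups


def categorize_group(group, category):
    """Apply a single review decision to every trip in the group."""
    for trip in group["trips"]:
        trip.category = category


# Usage: two Monday-morning office trips form a group; the grocery run does not.
trips = [
    Trip("t1", "Acme Office", datetime(2024, 3, 4, 9, 15), 12.4),
    Trip("t2", "Acme Office", datetime(2024, 3, 11, 8, 50), 12.1),
    Trip("t3", "Grocery Store", datetime(2024, 3, 6, 18, 30), 3.2),
]
groups = group_trips(trips)
categorize_group(groups[0], "Business")
```

A learned model would replace the fixed grouping key with signals such as cohort patterns and place information, but the two user-facing goals (an explainable reason and a whole-group action) stay the same.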

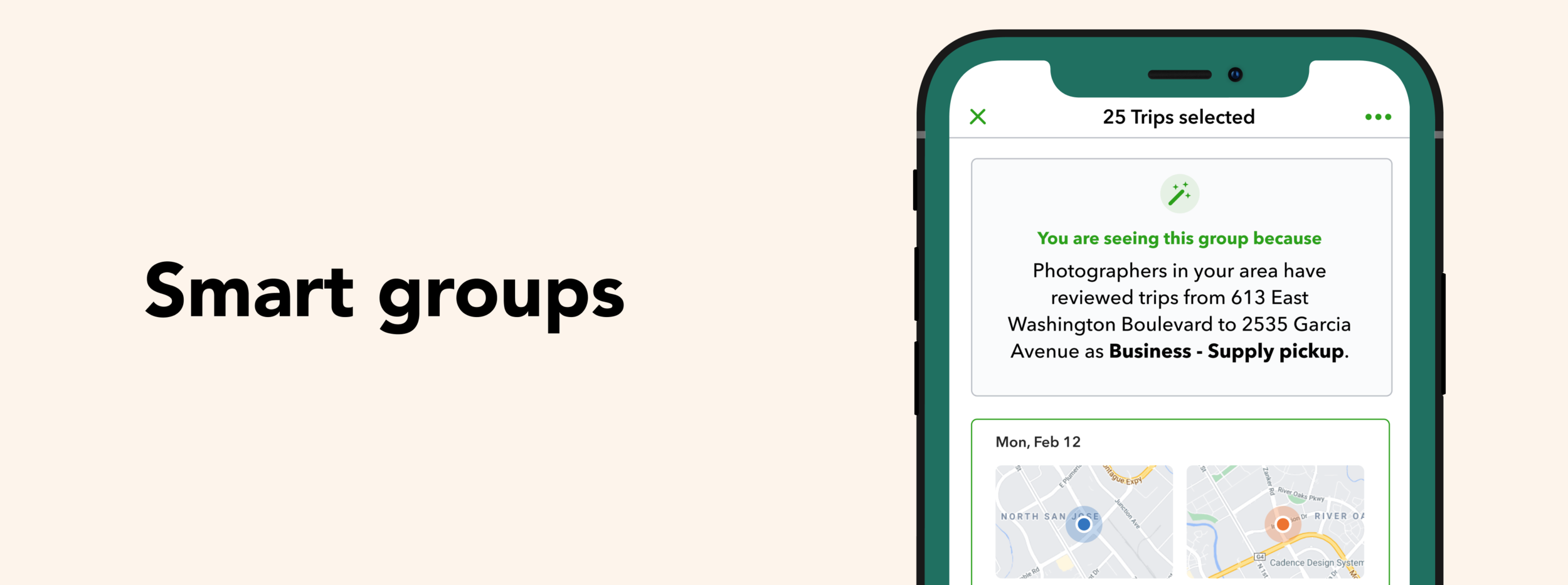

Smart groups

Smart groups use a machine learning algorithm, developed with Intuit’s data science team, that analyzes a user’s past driving patterns, the driving patterns of their industry cohort, information about the locations they visit, and other variables such as time of day and day of week to construct highly specific groups tailored to that user.

Brainstorming for the group layout

Once I was confident that grouping use cases could be expressed within the app experience, I ran a brainstorming session with the design, product and engineering teams to generate ideas for the group layout. After the session, I narrowed the ideas down to a few concepts to test with users.

Testing mileage groups

I worked with my data science partner to figure out how to test groups. We landed on a 3-step approach:

Groups on the mileage home:

The first step was to test how users would see groups on the mileage home page in the app.

Layout of the group page:

The next step was to test the page the user lands on after tapping a group on the mileage home page.

Accuracy and usefulness of groups:

The final step was an in-product A/B test of the accuracy of the grouping algorithm. This test did not depend on the other 2 tests.

Testing the grouping components

To get unbiased and accurate results, I used test participants’ real data to simulate, as closely as possible, what they would see in their own app. I tested 3 concepts for how groups could be expressed on the mileage home page and 2 concepts for the group page layout. The goal was to identify what worked in each concept.

Testing the grouping algorithm

To test the grouping algorithm, we ran an in-product A/B test. To get unbiased results, we showed groups in the app without any explanation of why the trips were grouped together. We wanted users to open a group, look at its trips and then take an action on them. In the background, the algorithm had already predicted how each user would review those trips. If the user’s action matched the prediction, the test was considered a success for that user.
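The success criterion above can be expressed as a small scoring step. This sketch assumes a hypothetical `ReviewEvent` log in which each row pairs the category the algorithm predicted before the user opened the group with the category the user actually chose. It also assumes that a user counts as a success only when every trip they reviewed matched; the case study does not specify that rule, so treat it as an illustration rather than the team's actual metric.

```python
from dataclasses import dataclass


@dataclass
class ReviewEvent:
    """Hypothetical log row for one reviewed trip in the A/B test."""
    user_id: str
    trip_id: str
    predicted: str  # category the algorithm predicted before the user saw the group
    actual: str     # category the user chose in the group view


def user_success(events):
    """Per user: True only if every reviewed trip matched the prediction (assumed rule)."""
    by_user = {}
    for e in events:
        match = e.predicted == e.actual
        by_user[e.user_id] = by_user.get(e.user_id, True) and match
    return by_user


def prediction_accuracy(events):
    """Share of reviewed trips whose chosen category matched the prediction."""
    if not events:
        return 0.0
    return sum(e.predicted == e.actual for e in events) / len(events)


# Illustrative data only, not results from the actual test.
events = [
    ReviewEvent("u1", "t1", "Business", "Business"),
    ReviewEvent("u1", "t2", "Business", "Business"),
    ReviewEvent("u2", "t3", "Personal", "Business"),
    ReviewEvent("u2", "t4", "Personal", "Personal"),
]
print(user_success(events))         # {'u1': True, 'u2': False}
print(prediction_accuracy(events))  # 0.75
```

Hiding the grouping reason, as described above, matters for this scoring: if users could see why trips were grouped, their choices might follow the explanation rather than their own recollection, inflating the match rate.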

Scalability of the groups page

In the test for the group page, layout 2 performed best. I then ran a scalability study to make sure the pattern could accommodate all grouping use cases.

Results

Layout 2 performed significantly better than layout 1 because its user controls were more discoverable and easier to access.

Overall prediction accuracy was ~87%.

Average overall review rate increased by 8%.

Unreviewed rate decreased by 10%.